Apache Airflow Best Practices for Software Developers [2021]

Fast-paced software development constantly brings new platforms, tools and solutions that simplify development processes. Many of them appear and shine for a short time, solving a specific issue, and then vanish as the requirements of the developer community change.

However, the strongest solutions, like Apache Airflow, gain wide acceptance and stay in use for a long time, evolving with the ever-changing software development environment. Let’s take a closer look at Apache Airflow best practices with Optimum Web experts.

What is Apache Airflow?

Apache Airflow is a modern open-source platform, written in Python, for programmatically managing workflows, especially complex ones that involve running large numbers of scripts. It covers the whole cycle, from creating workflows to scheduling and monitoring them, but is mostly used for building complex data pipelines. Because workflows are defined in Python, users can integrate Airflow with any third-party API or database that has a Python client in order to extract or load large amounts of data.

The development world owes Apache Airflow to Airbnb and a major problem the company experienced in 2015. This was a period of explosive growth for the homestay and travel experience marketplace, which meant storing and processing a huge amount of data that increased day by day. As a result, the company employed many data specialists, from engineers and analysts to scientists, to handle this information properly. The work of all these people had to be coordinated, all the batch jobs they created had to be scheduled, and the processes had to be automated. Thus Airflow was born. It later joined the Apache Incubator and graduated as a top-level project after three years. Today many large data engineering teams use Apache Airflow, which keeps growing together with its community.

Because the platform is open source, many use cases are documented and can be studied in detail in order to build something even more effective. Below are some ways to apply Airflow in real life.

Apache Airflow Use Cases

1. Spark. You can schedule and launch machine learning jobs that run on external Spark clusters.

2. Salesforce. You can aggregate the sales team’s updates daily and send regular reports to the company’s executives.

3. Data warehouse. You can periodically load website or application analytics data into the warehouse.

As we can see, Apache Airflow deservedly takes its place among the tools and platforms widely used in modern software deployment.

Best Practices for Apache Airflow Deployment

A common expectation in today’s software deployment is that processes should be simple and flexible; however, some procedures are mandatory and bring complexity. The following checklist helps keep software deployment smooth.

Establish proper development processes

This is the first and foremost step: it reduces deployment errors and issues such as code conflicts and overwritten changes. Put your code under version control, using a tool such as GitHub; create code repositories and divide your work into independent branches, for example a development branch, a testing branch and a bug-fixing branch.

Leverage deployment automation

One of the simplest yet most efficient measures in this list is to automate every deployment step that can be automated. This gives you a repeatable process, including all the necessary stages, that only has to be monitored. Just imagine how much time this tip can save you! Try classic approaches such as writing a deployment script, or tools like Jenkins or Apache Airflow.

Focus on building and packaging your application

Set up an immutable, repeatable process for building and packaging your application in order to simplify deployment across all your environments. Do not forget that this measure is necessary even if your deployment process is already automated.

Follow the standard deployment process

Use the same deployment process across all environments, including development, testing, staging and production. This helps prevent issues such as the application malfunctioning in some environments because of setup and configuration discrepancies. A consistent deployment process also supports the sanity checks performed at the pre-production stage.

Choose frequent deployment of small pieces of code

This step reduces the number of issues and narrows down their possible causes, and it allows more accurate testing than deploying big chunks of code and many features at once.

9 Benefits of Using Apache Airflow

Let’s now look at Apache Airflow as an example of a solution that smooths the deployment process. First, we’ll define what makes it a great tool for data processing, and then review the best Apache Airflow practices in more depth. To do that, let’s dive deeper into how the platform works.

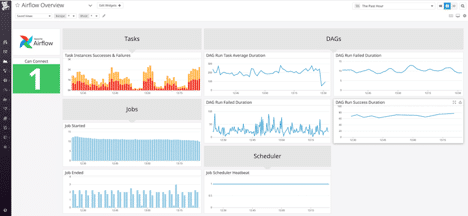

Since this is a platform designed to programmatically create, schedule and monitor workflows, you can use Apache Airflow to define workflows as directed acyclic graphs (DAGs) of tasks. The scheduler executes your tasks on a set of workers while following the specified dependencies. Rich command-line utilities make it simple to perform complex operations on DAGs. The multifunctional UI makes it easy to visualize pipelines running in production, watch their progress, and investigate issues when required.

A DAG is one way to define a workflow. It arranges a series of operations so that each one can be retried independently if it fails, and the workflow can be restarted from the point of failure.
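To make the DAG idea concrete, here is a minimal sketch in plain Python rather than Airflow code. It uses the standard library’s `graphlib` module (Python 3.9+) to derive a valid execution order for a small pipeline. The task names and the two helper functions are hypothetical. Airflow’s scheduler is far more elaborate, but the ordering constraint it respects is the same.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "report": {"load"},
    "notify": {"load"},
}


def execution_order(dependencies):
    """Return one valid run order in which every task follows its dependencies."""
    return list(TopologicalSorter(dependencies).static_order())


def has_cycle(dependencies):
    """Return True if the graph contains a cycle and is therefore not a DAG."""
    try:
        execution_order(dependencies)
    except CycleError:
        return True
    return False


order = execution_order(pipeline)
print(order)
```

Because `report` and `notify` depend only on `load`, either may come first in the printed order; in a scheduler, such tasks could run in parallel.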

1. Workflow Management

Apache Airflow provides several ways to set up workflows programmatically. For example, you can generate tasks dynamically within a DAG. XComs and sub-DAGs, in turn, let you build sophisticated dynamic workflows.

Don’t forget that connections and variables can be defined in the Airflow UI, and dynamic DAGs can be built on top of them.

2. Operators for running code

The most widely used operators for running code in Apache Airflow include:

- PythonOperator, which makes it quick to move Python code to production;

- PapermillOperator, which integrates Papermill, a tool for parameterizing and executing Jupyter notebooks. Netflix has even recommended combining Papermill with Airflow for automating and deploying notebooks.

3. Task dependency handling

Apache Airflow handles many kinds of dependencies well, including DAG run status, task completion, and the presence of a file or partition, and it supports concepts like branching.
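Branching can be sketched as a plain Python function that returns the ID of the task to follow. In Airflow, a function like this is typically handed to `BranchPythonOperator` as its `python_callable`. The task IDs and the `row_count` and `min_rows` parameters below are hypothetical, and the wiring into a DAG is left out so that the snippet runs without Airflow installed.

```python
def choose_branch(row_count, min_rows=1):
    """Pick the downstream task ID based on how many rows were extracted.

    Both task IDs are hypothetical; they would have to match tasks
    defined in the same DAG as the branching task.
    """
    if row_count >= min_rows:
        return "load_to_warehouse"
    return "skip_load"


print(choose_branch(row_count=250))
print(choose_branch(row_count=0))
```

Only the task whose ID is returned is followed; the other branch is skipped for that run.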

4. Scalability

Airflow’s extensible model lets you expand the platform by developing custom sensors, hooks and operators.

Because it is open source, Apache Airflow also benefits greatly from many community-contributed operators, which can drive tools written in other programming languages but are integrated through Python wrappers.

5. Monitoring and management interface

The Airflow interface for monitoring and handling tasks gives you an immediate view of the current status of every task. It also lets you trigger DAG runs and clear tasks.

6. Built-in retry policy

Apache Airflow comes with a built-in auto-retry mechanism. It is configured through a set of arguments that can be passed to any operator, because they are supported by the BaseOperator class: retries, retry_delay, retry_exponential_backoff and max_retry_delay.
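As a sketch, the retry arguments can be collected in a `default_args` dictionary, which a DAG applies to each of its operators. The values below are illustrative. The `capped_backoff` helper is hypothetical and only a simplified model of exponential backoff; Airflow’s own delay calculation is more involved, so real delays will differ.

```python
from datetime import timedelta

# Illustrative values; the keys are BaseOperator arguments.
default_args = {
    "retries": 3,
    "retry_delay": timedelta(minutes=5),
    "retry_exponential_backoff": True,
    "max_retry_delay": timedelta(minutes=15),
}


def capped_backoff(attempt, base_delay, max_delay):
    """Simplified model: double the delay on each retry, capped at max_delay.

    attempt is 1 for the first retry, 2 for the second, and so on.
    """
    return min(base_delay * 2 ** (attempt - 1), max_delay)


for attempt in range(1, default_args["retries"] + 1):
    delay = capped_backoff(
        attempt, default_args["retry_delay"], default_args["max_retry_delay"]
    )
    print(attempt, delay)
```

With these values the modeled waits are 5, 10 and 15 minutes: the third retry would double to 20 minutes, but the cap limits it to 15.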

7. Interface designed to efficiently interact with logs

One of the most in-demand Apache Airflow features is easy access, through the web UI, to the logs of every task run. This makes debugging tasks in production as easy as it can be.

8. REST API

This API is indispensable when workflows need to be created from external sources. The REST API makes it possible to create asynchronous workflows using the same model that is used for building pipelines.
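As an illustration, the sketch below builds, but does not send, a request that would trigger a DAG run through the stable REST API available since Airflow 2.0. The host, the DAG ID, the credentials, the `build_trigger_request` helper and the use of basic authentication are all assumptions; which authentication scheme works depends on how the deployment is configured.

```python
import base64
import json
from urllib.request import Request


def build_trigger_request(base_url, dag_id, conf, username, password):
    """Build a POST request for /api/v1/dags/{dag_id}/dagRuns without sending it."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return Request(
        url=f"{base_url.rstrip('/')}/api/v1/dags/{dag_id}/dagRuns",
        data=json.dumps({"conf": conf}).encode(),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",  # assumes a basic-auth backend
        },
        method="POST",
    )


# Hypothetical host, DAG ID and credentials, for illustration only.
request = build_trigger_request(
    "http://localhost:8080", "daily_sales_report", {"region": "emea"}, "admin", "admin"
)
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would send it, which requires a running Airflow webserver with the API enabled.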

9. Alert System

Apache Airflow sends alerts for failed tasks by default. Usually it notifies you by email, but you can also receive alerts via Slack.
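A Slack alert can be sketched as a callback that formats a message from the task context. In Airflow, a function that accepts the context dictionary can be assigned to a task’s `on_failure_callback` argument. The message layout, the `build_failure_message` name and the stand-in context below are assumptions, and the actual delivery to Slack (for example through a webhook) is left out.

```python
from types import SimpleNamespace


def build_failure_message(context):
    """Format a short alert from the context dict passed to failure callbacks."""
    ti = context["task_instance"]
    return (
        f"Task failed: {ti.dag_id}.{ti.task_id}\n"
        f"Error: {context.get('exception')}\n"
        f"Logs: {ti.log_url}"
    )


# Stand-in for the real context, with made-up values for illustration only.
fake_context = {
    "task_instance": SimpleNamespace(
        dag_id="daily_sales_report",
        task_id="load_to_warehouse",
        log_url="http://localhost:8080/log?example",
    ),
    "exception": ValueError("empty input file"),
}

message = build_failure_message(fake_context)
print(message)
```

Keeping the formatting separate from the delivery makes the message easy to test without a Slack workspace.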

Based on the information above, we have summarized the main benefits of the Apache Airflow platform for those who decide to use it. The most valuable features of the platform are:

- Python, which allows you to build complex workflows;

- an easy-to-read UI that gives immediate insight into task status;

- multiple integrations, such as Google Cloud Tasks and Cloud Functions, Cloud Natural Language, Dataproc, Amazon Kinesis Data Firehose and SNS, Azure Files, Apache Spark, and many more;

- ease of use, making workflow deployment accessible to anyone who knows Python;

- open-source nature, providing access to the wealth of experience and expertise of the Apache Airflow community.

Apache Airflow’s founding principles are scalability, dynamism, extensibility and elegance. They make it a good choice for Python developers who strive to deliver neat, clear code that works well.

Optimum Web offers